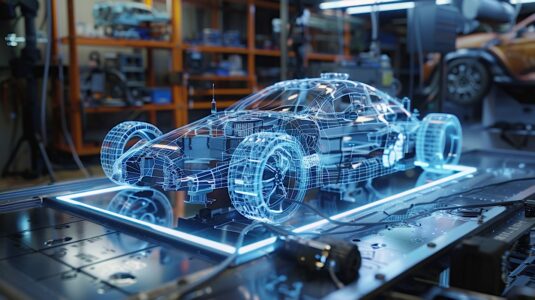

A team of NVIDIA researchers led by Stan Birchfield and Jonathan Tremblay has set out to teach a robot to complete tasks through machine learning. The approach trains the robot to observe a person's movements and interactions with objects so that it can "learn" to perform the same actions itself. The technique was tested in a real-world setting in which a Baxter robot stacked colored cubes. The findings were published in a paper titled Synthetically Trained Neural Networks for Learning Human-Readable Plans from Real-World Demonstrations.

The experiment is intended to gauge how humans may work alongside robots, and how safely and efficiently they can do so. "In order for robots to perform useful tasks in real-world settings, it must be easy to communicate the task to the robot; this includes both the desired end result and any hints as to the best means to achieve that result," the paper's six authors wrote.

To test the technique, a camera provided a live video feed of a scene, and the positions and relationships of the objects in it were inferred in real time by a pair of neural networks. The camera recorded a person performing a series of actions, and the inferred information was fed to another network, which generated a plan for re-creating those relationships and movements. A final execution network read the plan and generated actions for the robot. Amazingly, the robot was able to learn the task from a single demonstration.
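To make the three-stage pipeline concrete, here is a minimal, hypothetical sketch of how data might flow from perception to plan to robot commands. It is not NVIDIA's code: each trained network is replaced by a simple hand-written rule, and the names `perceive`, `generate_plan`, `execute`, `OnTop`, and the cube size are illustrative assumptions. What it does show is the idea of an intermediate, human-readable plan ("place red on blue") sitting between perception and execution.

```python
from __future__ import annotations

from dataclasses import dataclass

CUBE_SIZE = 0.05  # hypothetical cube edge length in meters


@dataclass(frozen=True)
class OnTop:
    """Human-readable relationship: `upper` rests directly on `lower`."""
    upper: str
    lower: str


def perceive(positions: dict[str, tuple[float, float, float]]) -> set[OnTop]:
    """Stand-in for the perception networks: infer 'on top of' relations
    from estimated 3D positions (x, y, z) of cube centers."""
    relations = set()
    for a, (ax, ay, az) in positions.items():
        for b, (bx, by, bz) in positions.items():
            if a == b:
                continue
            # Roughly the same x/y footprint...
            aligned = abs(ax - bx) < CUBE_SIZE / 2 and abs(ay - by) < CUBE_SIZE / 2
            # ...and `a` sits about one cube height above `b`.
            stacked = abs((az - bz) - CUBE_SIZE) < CUBE_SIZE / 4
            if aligned and stacked:
                relations.add(OnTop(a, b))
    return relations


def generate_plan(relations: set[OnTop]) -> list[str]:
    """Stand-in for the plan-generation network: order the demonstrated
    relations bottom-up so each cube is placed after the one beneath it."""
    below = {r.upper: r.lower for r in relations}

    def height(cube: str) -> int:
        # Count how many cubes lie beneath this one in the stack.
        h = 0
        while cube in below:
            cube = below[cube]
            h += 1
        return h

    ordered = sorted(relations, key=lambda r: (height(r.upper), r.upper))
    return [f"place {r.upper} on {r.lower}" for r in ordered]


def execute(plan: list[str]) -> list[tuple[str, str]]:
    """Stand-in for the execution network: turn each readable step
    into a (pick, place) command pair for the robot."""
    commands = []
    for step in plan:
        _, upper, _, lower = step.split()
        commands.append((f"pick {upper}", f"place on {lower}"))
    return commands


if __name__ == "__main__":
    # Final cube positions observed at the end of one human demonstration.
    demo = {"blue": (0.0, 0.0, 0.025), "red": (0.0, 0.0, 0.075), "green": (0.0, 0.0, 0.125)}
    plan = generate_plan(perceive(demo))
    print(plan)  # ['place red on blue', 'place green on red']
    print(execute(plan))
```

Because the plan is plain text, a person can read it, check it, or correct it before the robot acts, which is the appeal of a "human-readable" plan; in the real system each stage is learned rather than hand-coded.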

"Industrial robots are typically all about repeating a well-defined task over and over again," wrote Frederic Lardinois of TechCrunch. "Usually, that means performing those tasks a safe distance away from the fragile humans that programmed them. More and more, however, researchers are now thinking about how robots and humans can work in close proximity to humans and even learn from them."

According to Birchfield, the researchers wanted to simplify robot training so that experts and novices alike could do it. The team trained its networks mostly on synthetic data from a simulated environment, which allowed training to proceed quickly. "We think using simulation is a powerful paradigm going forward to train robots to do things that weren't possible before," he said.

According to Dieter Fox, NVIDIA's Senior Director of Robotics Research, the team's goal was to enable the next generation of robots to work safely in close proximity to humans. This research, and work like it, could go a long way toward teaching robots to assist humans in any number of situations, whether at work or at home. The team will next work on increasing the range of tasks the robot can learn and perform, and on expanding the vocabulary needed to describe those tasks.